F7 Berlin, Germany juliane.

Abstract - This paper presents two studies that investigate how people praise and punish robots in a collaborative game scenario. In a first study, subjects played a game together with humans, computers, and anthropomorphic and zoomorphic robots.

The different partners and the game itself were presented on a computer screen. Results show that praise and punishment were used the same way for computer and human partners.

Yet robots, which are essentially computers with a different embodiment, were treated differently. However, barely any of the participants believed that they actually played together with a robot. After this first study, we refined the method and also tested whether the presence of a real robot, compared with a screen representation, would influence the measurements. We automatically measured the praising and punishing behavior of the participants towards the robot and also asked the participants to estimate their own behavior.

Results show that even the presence of the robot in the room did not convince all participants that they played together with the robot. To gain full insight into this human-robot relationship it might be necessary to directly interact with the robot. The participants unconsciously praised AIBO more than the human partner, but punished it just as much.

The United Nations (UN), in a recent robotics survey, identified personal service robots as having the highest expected growth rate (United Nations). These robots are envisaged to help the elderly (Hirsch et al.).

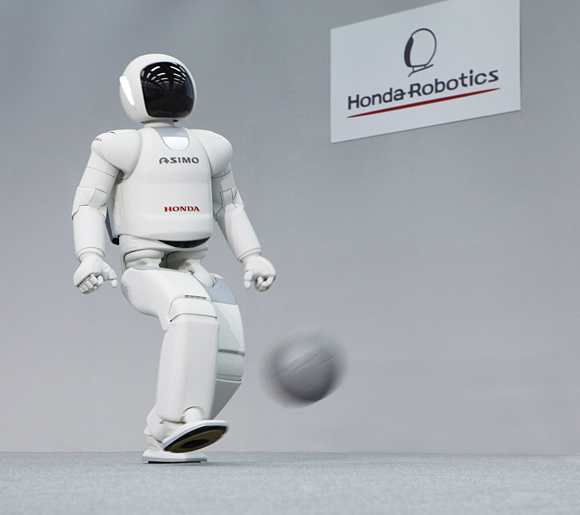

In the last few years, several robots have been introduced commercially and have received widespread media attention. Robosapien has sold approximately 1. AIBO was discontinued in January , which might indicate that the function of entertainment alone may be an insufficient task for a robot. In the future, robots that cooperate with humans in working on relevant tasks will become increasingly important.

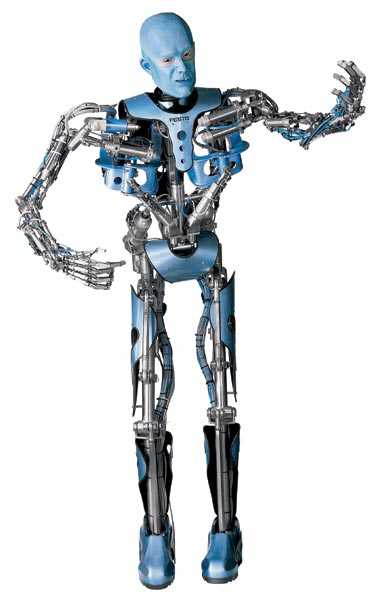

As human-robot interaction increases, human factors are clearly critical concerns in the design of robot interfaces to support collaborative work; human response to robot teamwork and support is the subject of this paper. If computers are perceived as social actors, android interfaces, which clearly emulate human facial expression, social interaction, voice and overall appearance, will generate empathetic inclinations from humans. In other words, engineers are designing interfaces based on the assumption that a realistic human interface is essential to an immersive human-robot interaction experience.

The goal is to create a situation that mimics a natural human-human interaction. Designing androids with anthropomorphized appearance for more natural communication encourages a fantasy that interactions with the robot are thoroughly human-like and promotes emotional or sentimental attachment.

Fong, Nourbakhsh and Dautenhahn provide detailed definitions of social robotic terms, especially regarding appearance and behavior, and discuss a taxonomy of social characteristics in robots. As the industry growth cited in the UN survey above illustrates, people will in future likely interact regularly with many different types of robots, much as they do with home electronics or appliances. For that reason, there should be further exploration of the roles of robot companions in society and the value placed on relationships with them.

As robots are deployed across domestic, military and commercial fields, there is an acute need for further consideration of human factors. The focus of our research is the exploration of human relationships with robots in collaborative situations. Similar effects may also emerge in human-robot collaboration. In actions and situations where people interact with robots as co-workers, it is necessary to distinguish human-robot collaboration from human-robot interaction. In this paper, we define collaboration as the willingness to work with a partner towards a common goal.

Limitations in terms of access to materials, cost and time often prohibit extensive experimentation with robots. Therefore, interaction with robots is often simulated through screen characters.

Such simulations might provide insight, focus future efforts and could enhance the quality of actual testing by increasing potential scenarios. In this paper, because the nature of the experiment was intended to replicate a remote scenario, presence is defined similarly. Here, presence refers to the perception of a communicative partner having a feeling of shared space. In this study we report on an experiment in which human subjects collaboratively interact with other humans, a robot and a representational screen character of a robot on a specific task.

The resulting reaction of the subjects was measured, including the number of punishments and praises given to the robot, and the intensity of punishments and praises. Fehr and Gaechter showed that subjects who contributed below average were punished frequently and harshly, using money units, even if the punishment was costly for the punisher. The overall result showed that the less subjects contributed to team performance, the more they were punished.

In order not to lead the participants in one direction, we also offered the possibility of praise in the experiment reported here. The research questions that follow from this line of thought for the first study are related to the use of praise and punishment. We conducted a first study to investigate what type of robot would be suitable for the experiment. Therefore, as maintained by Mori, until fully human robots are a possibility, humans will have an easier time accepting humanoid machines that are not particularly realistic-looking.

We used three robots: In addition to the robots, we used a human and a computer as partners in the experiment to see if computers are treated like humans in a praise and punishment scenario.

Besides the influence that the robots' anthropomorphism may have, we were also interested in testing the experimental method. Twelve participants took part in this preliminary experiment; six were male and six were female. The mean age of the participants was They did not have any experience with robots other than having seen or read about different robots in the media, which was tested through a pre-questionnaire. Participants received course credit or candy for their participation.

We conducted a 5 (partner) x 2 (error rate) within-subject experiment, manipulating interaction partner human, computer, robot1: AIBO and error rate high: The pictures were displayed on the computer screen each round so the participant knew what the current partner looked like. No picture was shown when the participant was teamed up with a human or a computer.

For the task, pictures with one or several objects on them were used (examples shown in Figure 3). The objects had to be named or counted.

Figure 3. Example objects: naming (L) and counting (R).

The experiment was set up as a tournament, in which humans, robots and computers played together in 2-member teams. The participants were teamed up with a human, a computer, and each robot in random order. The subject played together with one partner per round.

The orders of the trials were counterbalanced. Each trial consisted of 20 tasks. The performance of both players equally influenced the team score. To win the competition both players had to perform well. The participants were told that the tournament was held simultaneously in three different cities, and due to the geographical distance the team partners could not meet in person; subjects would use a computer to play and communicate with their partners.

Every time the participant played together with a robot, a picture of the robot was shown on the screen as an introduction. No picture was shown if the participant played together with a human or a computer, because it can be expected that the participants were already familiar with humans. Furthermore, they were already sitting in front of a computer and hence it appeared superfluous to add another picture of a computer on the computer screen if the participant played in a team together with a computer.

Since robots are much less familiar to the general public, pictures were shown in those conditions. After the instruction, the participants completed a brief demographic survey, and conducted an exercise trial with the software.

Following the survey, subjects had the opportunity to ask questions before the tournament started. The participants were told that these tasks might be easy for them but would be much more difficult for computers and robots. To guarantee equal chances for all players and teams, the task had to be on a level that the computers and robots could perform.

After the participants entered their answer on the computer the result was shown. Subjects were told that for the team score, correct answers of the participant and the partner were counted. A separate score for each individual was kept for the number of plus and minus points. At the end, there would be a winning team, and a winning individual.

The participants were told that their partners were also able to give praises and punishments, but this information would only become available at the end of the tournament. It was up to the participants to decide upon the usefulness of the praises and punishments. After each trial, the participant had to estimate how many errors the partner had made, how often the participant had punished the partner with minus points and how often the participant had praised the partner with plus points.

In addition, the participants had to judge how many plus and minus points they had given to the partner. Then, the participants started a new round with a new partner. The form of an interview was chosen over a standard questionnaire to clearly separate these questions from the experiment. It provided a more informal setting in which the participants may have felt more relaxed to share their possible doubts. Finally, participants were debriefed.

The experiment took approximately 40 minutes. To get comparable numbers across the error conditions, the actual number of praises or punishments was divided by the possible number of praises or punishments.

This gives a number between 0 and 1. Zero means that no praises or punishments were given and 1 means that praises or punishments were given every time. All partners — human, computer and robots — received praise and punishment, i. Error rate did not have an effect on frequency or intensity of praises and punishments. See Figure 4 and Figure 5 for frequencies and intensities of praises and punishments.
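The normalization described above can be sketched in a few lines. This is a minimal illustration, not the analysis code used in the study: the function name `normalized_rate` and the example counts are hypothetical, and the sketch assumes the number of opportunities for praise or punishment is known for each trial.

```python
def normalized_rate(actual: int, possible: int) -> float:
    """Return the share of opportunities on which a praise or punishment was given.

    0.0 means none were given; 1.0 means one was given at every opportunity.
    """
    if possible <= 0:
        raise ValueError("possible must be a positive count")
    if not 0 <= actual <= possible:
        raise ValueError("actual must lie between 0 and possible")
    return actual / possible


# Hypothetical counts for illustration only (not data from the study).
print(normalized_rate(3, 12))  # 0.25
```

Dividing by the number of opportunities is what makes conditions with different error rates comparable, since a partner who makes more errors offers more occasions for punishment.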

Figure 4. Frequencies for praises and punishments.

Figure 5. Intensities for praises and punishments.

Participants were asked to evaluate their praise and punishment behavior after each partner. For the analysis, the real frequency and intensity of praises and punishments were subtracted from the estimated values.

For the resulting numbers, zero indicates a correct estimation, a negative value an underestimation and a positive value an overestimation of the real behavior.
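The sign convention for the estimation error can be made concrete with a short sketch. The function names `estimation_error` and `classify_estimate` and the example values are assumptions introduced here for illustration; they are not taken from the study.

```python
def estimation_error(estimated: float, real: float) -> float:
    """Estimated value minus real value, following the convention in the text."""
    return estimated - real


def classify_estimate(error: float) -> str:
    """Map the signed error to the interpretation used in the analysis."""
    if error > 0:
        return "overestimation"
    if error < 0:
        return "underestimation"
    return "correct"


# Hypothetical example: a participant reports 5 punishments but gave 3.
error = estimation_error(estimated=5, real=3)
print(error, classify_estimate(error))  # 2 overestimation
```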

Results show that subjects overestimated the frequency of punishments for low error rates.