Json rpc ethereum prison



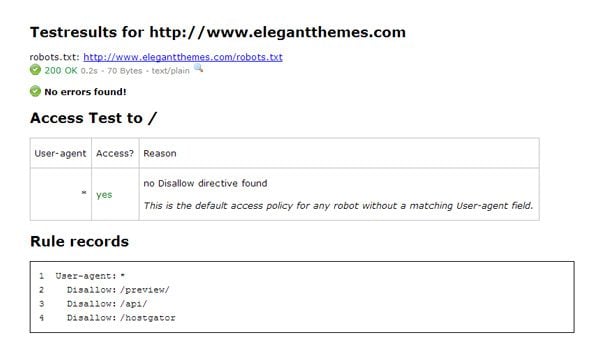

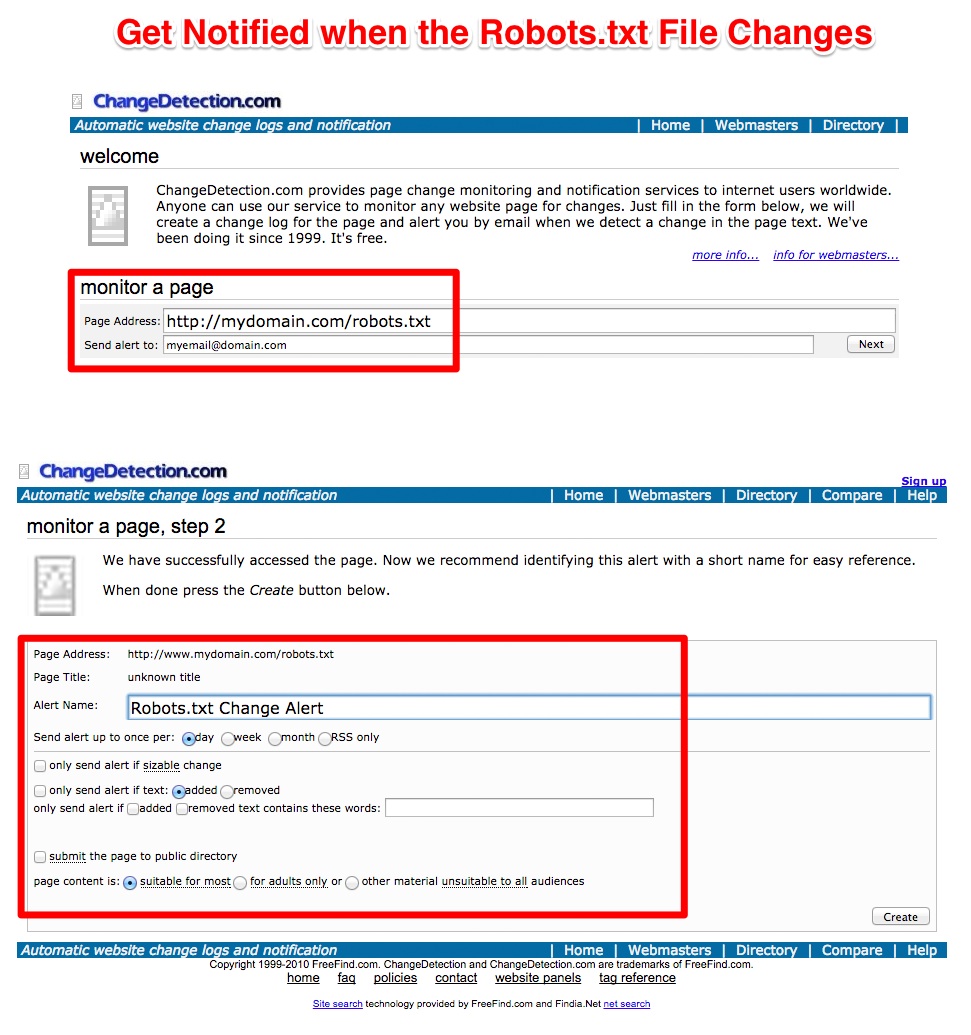

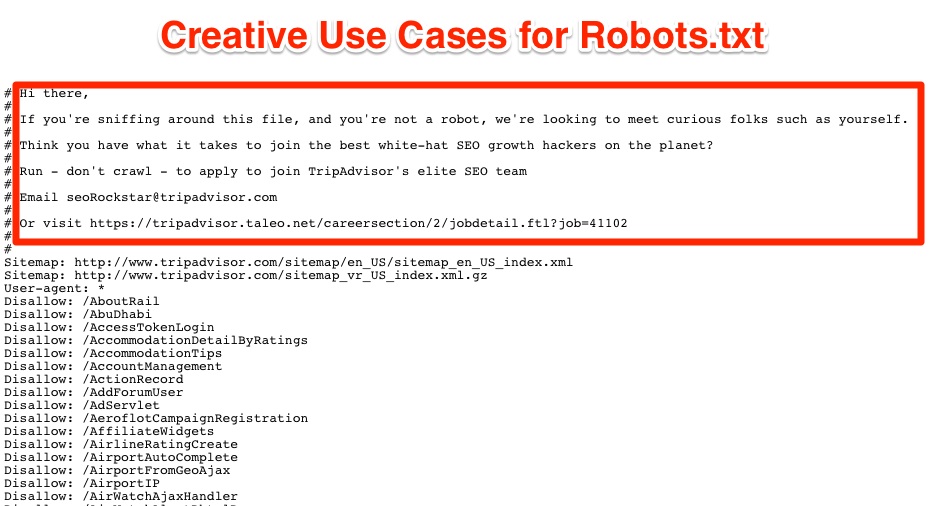

The Robot Exclusion Standard, or Robot Exclusion Protocol, tells search engine spiders which directories of your website they should skip (disallow). These instructions are kept in the robots.txt file. Small errors in that file can change the way search engines index your site, and this can have adverse effects on your SEO strategy. If you are interested in knowing more about the Robot Exclusion Protocol, click here: http: If you open the file in a text editor, you will find a list of directories that the site webmaster asks search engines to skip.
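To show what that list looks like to a program, here is a simplified reader that collects the `Disallow` paths for each user-agent group. It is a sketch, not a full implementation of the Robot Exclusion Protocol: it ignores `Allow` lines and records each value as written, without interpreting wildcards. The sample file and its directory names are hypothetical. For checking whether a given URL may be crawled, Python's standard `urllib.robotparser` module is the more complete option.

```python
from collections import defaultdict

# Hypothetical robots.txt contents; the directory names are placeholders.
ROBOTS_TXT = """\
# Rules for every robot
User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/

User-agent: Googlebot
Disallow: /drafts/
"""


def disallowed_directories(text: str) -> dict[str, list[str]]:
    """Map each user-agent to the paths it is asked to skip.

    Simplified reader: consecutive User-agent lines share the rules that
    follow them; Allow lines and other fields are not recorded.
    """
    rules: dict[str, list[str]] = defaultdict(list)
    agents: list[str] = []
    reading_agents = False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if not reading_agents:  # a new group of user-agents begins
                agents = []
                reading_agents = True
            agents.append(value)
        else:
            reading_agents = False
            if field == "disallow" and value:  # an empty Disallow blocks nothing
                for agent in agents:
                    rules[agent].append(value)
    return dict(rules)


if __name__ == "__main__":
    for agent, paths in disallowed_directories(ROBOTS_TXT).items():
        print(agent, paths)
```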

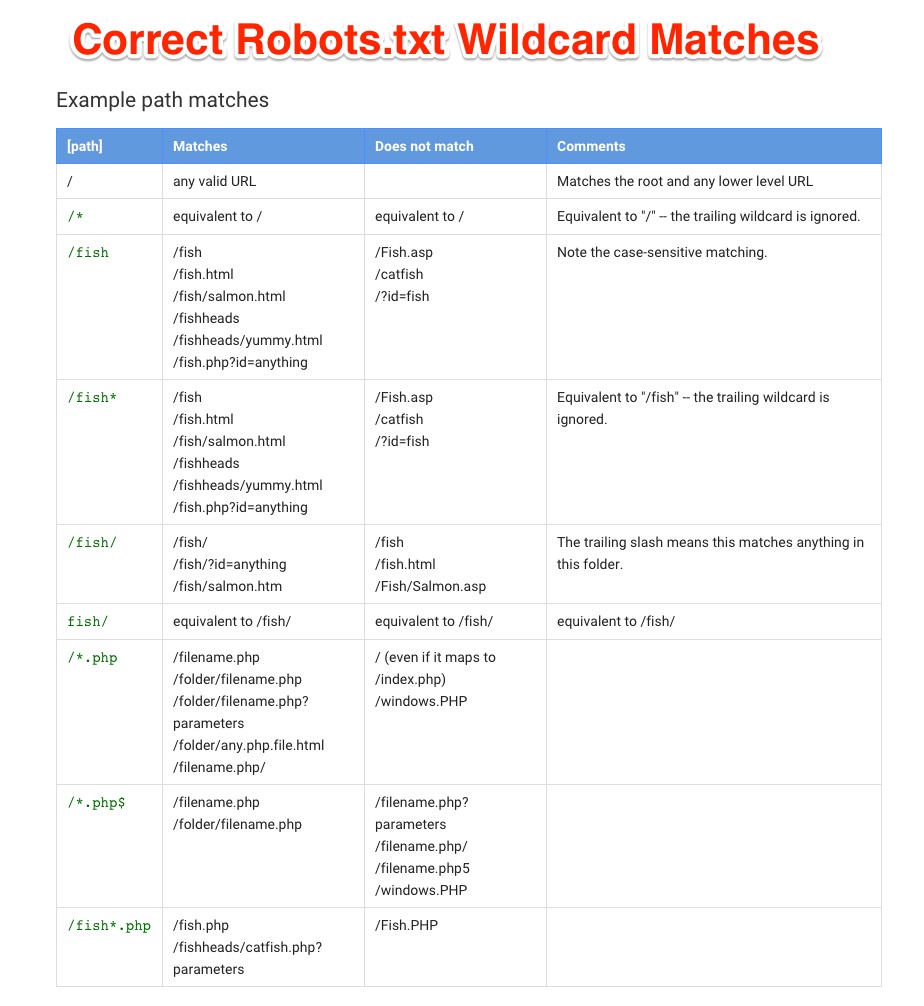

It is therefore important to ensure that the file does not ask search engines to skip important directories in your website. The general format for excluding all robots from certain parts of a website is a `User-agent: *` line followed by one `Disallow:` line for each directory to be skipped. The file can also be more selective, for example disallowing Googlebot from indexing a folder while still allowing the indexing of one file in that folder.
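A minimal sketch of the Googlebot case, using Python's standard `urllib.robotparser` module. The folder and file names (`/folder1/`, `/folder1/myfile.html`) and the list of important paths are hypothetical. The last lines apply the check recommended above by confirming that none of the important paths is blocked.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot may not crawl /folder1/, except for the one
# file /folder1/myfile.html. Both paths are placeholders.
# The Allow line comes first because urllib.robotparser applies the first
# rule that matches; Googlebot itself uses the most specific (longest)
# matching rule, so for Google the order of these two lines does not matter.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /folder1/myfile.html
Disallow: /folder1/
"""

# Hypothetical paths that must stay crawlable for the site's SEO.
IMPORTANT_PATHS = ["/", "/blog/", "/products/"]

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def googlebot_may_crawl(path: str) -> bool:
    """True if the parsed rules let Googlebot fetch this path."""
    return parser.can_fetch("Googlebot", "https://www.example.com" + path)


# Guard against the mistake described above: important paths being blocked.
blocked = [p for p in IMPORTANT_PATHS if not googlebot_may_crawl(p)]
if blocked:
    print("robots.txt blocks important paths:", blocked)
```

Placing the `Allow` line before the `Disallow` line keeps the outcome the same for first-match parsers such as `urllib.robotparser` and for Google's longest-match rule.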

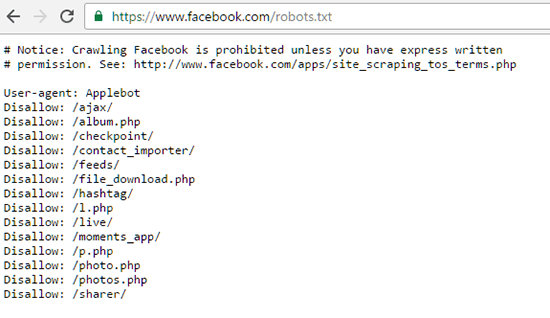

Matthew Anton is the co-founder of BacklinksVault.

Some examples of robots.txt rules follow. Each example starts with a `User-agent:` line naming the robot the rules apply to:

- Allow indexing of everything
- Disallow indexing of everything
- Disallow indexing of a specific folder
- Disallow Googlebot from indexing a folder, except for allowing the indexing of one file in that folder
- Exclude a single robot

Certain directories in your website may contain duplicate content, such as print versions of articles or web pages.
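Four of the listed examples can be tried out with Python's standard `urllib.robotparser` module. In this sketch each example is a separate, hypothetical robots.txt. The folder example uses a placeholder `/print/` directory of the kind that holds duplicate print versions, and `BadBot` stands in for whichever robot you want to exclude.

```python
from urllib.robotparser import RobotFileParser

# One hypothetical robots.txt per example. "BadBot" and "/print/" are
# placeholder names.
EXAMPLES = {
    # An empty Disallow value blocks nothing.
    "allow everything": "User-agent: *\nDisallow:\n",
    # A single slash matches every path on the site.
    "disallow everything": "User-agent: *\nDisallow: /\n",
    # All robots skip one folder; the rest of the site stays crawlable.
    "disallow a folder": "User-agent: *\nDisallow: /print/\n",
    # Only the named robot is shut out; other robots are unaffected.
    "exclude a single robot": "User-agent: BadBot\nDisallow: /\n",
}


def can_crawl(robots_txt: str, agent: str, path: str) -> bool:
    """Return True if `agent` may fetch `path` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, "https://www.example.com" + path)


if __name__ == "__main__":
    for name, rules in EXAMPLES.items():
        for agent in ("Googlebot", "BadBot"):
            for path in ("/article.html", "/print/article.html"):
                verdict = "crawl" if can_crawl(rules, agent, path) else "skip"
                print(f"{name:24} {agent:10} {path:20} {verdict}")
```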

Disallowing directories of duplicate content helps ensure that search engine bots index the main content of your website. You can also prevent search engines from indexing files in a directory that may contain scripts, personal data or other kinds of sensitive data.

What to avoid in robots.txt

Therefore, avoid using such commands in the file. Do not list each file you want to keep out of search results: anyone can read robots.txt, so such a list tells others exactly which files you are trying to hide. Instead, put all of those files in one directory and disallow that directory.
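The difference between naming individual files and disallowing one directory can be sketched with Python's standard `urllib.robotparser` module. Both hypothetical files are blocked either way, but only the first version spells out their names in a file that anyone can fetch. The file names and the `/private/` directory are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Placeholder names of files the site owner would rather keep out of search.
FILE_NAMES = ["report-2014.pdf", "price-list.html"]

# Discouraged: each file is named in robots.txt, which anyone can read.
LISTED = "User-agent: *\n" + "".join(f"Disallow: /{name}\n" for name in FILE_NAMES)

# Preferred: the same files are moved into one directory, and only the
# directory is named.
GROUPED = "User-agent: *\nDisallow: /private/\n"


def is_blocked(robots_txt: str, path: str) -> bool:
    """True if a generic crawler is told not to fetch `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("ExampleBot", "https://www.example.com" + path)


def names_revealed(robots_txt: str) -> list[str]:
    """File names a visitor learns just by reading robots.txt."""
    return [name for name in FILE_NAMES if name in robots_txt]


if __name__ == "__main__":
    print("listed:", names_revealed(LISTED))
    print("grouped:", names_revealed(GROUPED))
```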