Sign in bitcoin wallet

30 comments

Even parity bit error correction in esl

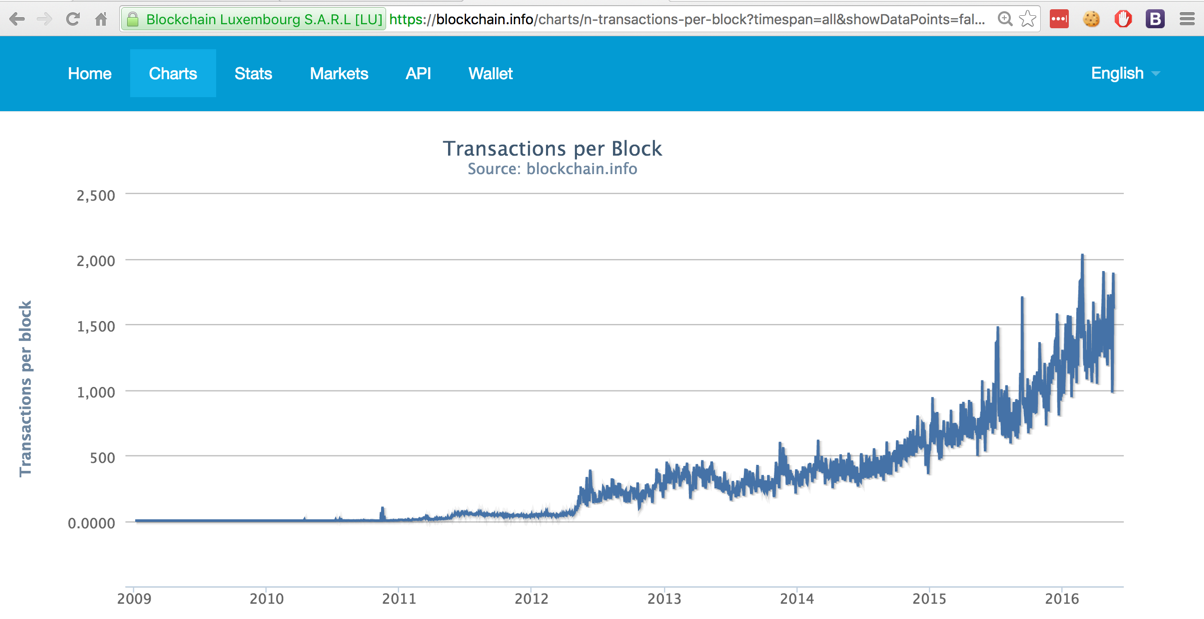

Miners compete to find blocks by solving a computational puzzle, incentivized by the new Bitcoins minted in each block as their reward. The difficulty level is periodically adjusted so that blocks are found on average every 10 minutes. That is a statistical average, not an iron-clad rule: a lucky miner could find a block after a few seconds.

Alternatively, all miners could get collectively unlucky and require a lot more time. In other words, the protocol adapts to find an equilibrium: if more mining power joins the network, difficulty adjusts upward so that blocks still arrive roughly every 10 minutes. Similarly, if miners reduce their activity because of increased costs, difficulty adjusts downward and blocks become easier to find. Curiously, block size has been fixed for some time at 1 megabyte, and there are no provisions in the protocol for increasing it dynamically.

That stands in sharp contrast to many other attributes, which either change on a fixed schedule (the amount of Bitcoin rewarded for mining a block decreases over time) or adjust automatically in response to current network conditions, such as the block difficulty.
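For contrast with the fixed block size, here is a minimal Python sketch of the two other kinds of parameters. The constants are Bitcoin's consensus values (a 50 BTC initial reward halving every 210,000 blocks; difficulty retargeting every 2016 blocks, limited to a factor of 4 per adjustment). The functions, however, are simplified illustrations rather than Bitcoin Core's code. For instance, the real retarget also caps the target at a network-wide maximum, which is omitted here.

```python
COIN = 100_000_000                          # satoshis per bitcoin
HALVING_INTERVAL = 210_000                  # blocks between reward halvings
RETARGET_INTERVAL = 2016                    # blocks between difficulty adjustments
TARGET_TIMESPAN = RETARGET_INTERVAL * 600   # two weeks at 10 minutes per block


def block_subsidy(height: int) -> int:
    """Fixed schedule: newly minted satoshis in the block at `height`."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:                      # reward is forced to zero past this point
        return 0
    return (50 * COIN) >> halvings          # each right shift halves the reward


def next_target(old_target: int, actual_timespan: int) -> int:
    """Automatic adjustment: new proof-of-work target after 2016 blocks.

    A lower target means a harder puzzle. Simplified sketch: the cap at
    the network-wide maximum target is omitted.
    """
    # Limit each adjustment to a factor of 4 in either direction.
    clamped = max(TARGET_TIMESPAN // 4, min(actual_timespan, TARGET_TIMESPAN * 4))
    return old_target * clamped // TARGET_TIMESPAN


print(block_subsidy(0) / COIN, block_subsidy(420_000) / COIN)  # 50.0 12.5

old = 1 << 200  # arbitrary example target
# The last 2016 blocks took one week instead of two: the target halves (harder).
print(next_target(old, TARGET_TIMESPAN // 2) == old // 2)  # True
```

A lower target means a harder puzzle, because a valid block hash must fall below the target.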

There is no provision for growing blocks as the limit is approached, which is the current situation. What is the effect of that limitation on funds movement? The good news is that space restrictions have no bearing on the amount of funds moved. A transaction moving a billion dollars need not consume any more space than one moving a few cents. But the limit does cap the number of independent transactions that can be cleared in each batch. Alice can still send Bob a million dollars, but if hundreds of people like her wanted to send a few dollars to hundreds of people like Bob, they would be competing against each other for inclusion in upcoming blocks.
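Amount does not affect size because of how transactions are serialized. Each output's value is a fixed-width 8-byte integer counted in satoshis, so one satoshi and the entire 21 million BTC supply take up the same space. Here is a minimal illustration using Python's standard struct module:

```python
import struct

MAX_SUPPLY_SAT = 21_000_000 * 100_000_000  # every bitcoin that will ever exist, in satoshis


def serialize_amount(satoshis: int) -> bytes:
    """Encode an output value as Bitcoin's wire format does:
    a signed 64-bit little-endian integer."""
    return struct.pack("<q", satoshis)


print(len(serialize_amount(1)), len(serialize_amount(MAX_SUPPLY_SAT)))  # 8 8
```

Transaction size is driven by the number of inputs and outputs and their scripts and signatures, not by the amounts they carry.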

Theoretical calculations suggest a throughput of roughly 7 TX per second, although later arguments cast doubt on the feasibility of achieving even that. Each TX can have multiple sources and destinations, moving the combined sum of funds in those sources to the destinations in any proportion.

That is a double-edged sword. Paying multiple unrelated people in a single TX is more efficient than creating a separate TX for each destination. On the downside, scrounging together multiple inputs from past transactions to fund a payment introduces inefficiency.
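Back-of-the-envelope arithmetic reproduces both the ~7 TX/s figure and the batching trade-off. The sketch below assumes the commonly quoted rule-of-thumb sizes for legacy pay-to-pubkey-hash transactions: about 10 bytes of overhead, 148 bytes per input and 34 bytes per output. These are approximations, not figures from this article, and real transactions vary.

```python
MAX_BLOCK_BYTES = 1_000_000   # the 1 MB limit
BLOCK_INTERVAL_S = 600        # average seconds between blocks

# Assumed rule-of-thumb sizes for legacy P2PKH transactions (approximate).
OVERHEAD, PER_INPUT, PER_OUTPUT = 10, 148, 34


def approx_tx_size(n_inputs: int, n_outputs: int) -> int:
    """Rough serialized size in bytes."""
    return OVERHEAD + PER_INPUT * n_inputs + PER_OUTPUT * n_outputs


def max_tps(avg_tx_bytes: float) -> float:
    """Transactions per second if every block is full of such transactions."""
    return MAX_BLOCK_BYTES / avg_tx_bytes / BLOCK_INTERVAL_S


simple = approx_tx_size(1, 2)            # one payment plus change: 226 bytes
print(round(max_tps(simple), 2))         # 7.37

# Batching: paying 3 people in one TX (3 payments + change) vs. three separate TX.
print(approx_tx_size(1, 4), 3 * simple)  # 294 678

# Scrounging: funding one payment from 10 small past outputs.
print(approx_tx_size(10, 2))             # 1558
```

Under these assumptions a simple payment is about 226 bytes, giving roughly 7.4 TX per second. Batching three payments costs 294 bytes instead of 678. Consolidating ten small inputs pushes a single payment past 1.5KB.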

Still, adjusting for these factors does not appreciably alter the capacity estimate. Historically, the 1MB limit was introduced as a defense against denial-of-service attacks, to guard against a malicious node flooding the network with very large blocks that other nodes cannot keep up with. Decentralized trust relies on each node in the network independently validating all incoming blocks and deciding for itself whether each block has been properly mined.

A node that cannot keep up has to take another node's word for which blocks are valid. That does not distribute trust; instead it effectively concentrates power, granting the other node extra influence over how others view the state of the Bitcoin ledger. Now if some miner on a fast network connection creates giant blocks, other miners on slow connections may take a very long time to receive and validate them.

As a result they fall behind, and all of their effort to mine the next block on top of an obsolete state will be wasted.

Arguments against increasing blocksize start from this perspective: larger blocks will render many nodes on the network incapable of keeping up, effectively increasing centralization. When fewer and fewer nodes are paying attention to which blocks are mined, the argument goes, distributed trust decreases. This logic may be sound, but the problem is that Bitcoin Core, the open-source software powering full nodes, has never come with any type of MSR (minimum system requirements) spelling out what it takes to operate a node.

Publishing minimum requirements is standard practice for commercial software such as Windows. In the old days, when shrink-wrap software actually came in shrink-wrapped packages, those requirements were prominently displayed on the packaging to alert potential buyers.

But it also holds true for open-source distributions such as Ubuntu and for specialized applications like Adobe Photoshop. That brings us to the first ambiguity plaguing this debate: what hardware is a full node expected to run on? No reasonable person would expect to run ray-tracing on their vintage smartphone, so why would anyone be entitled to run a full Bitcoin node on a device with limited capabilities?

This has been pointed out by other critics. Perhaps in a nod to privacy, bitcoind does not have any remote instrumentation to collect statistics from nodes and upload them to a central place for aggregation. Nor has there been a serious attempt to quantify resource usage in realistic settings. In the absence of MSR criteria or telemetry data, anecdotal evidence and intuition rule the day when hypothesizing which resource may become a bottleneck when blocksize is increased.

This is akin to trying to optimize code without a profiler, going by gut instinct about which sections might be the hot spots that merit attention. The blocksize debate brought renewed attention to the cost of signature verification, and the Core team has done significant work on improving ECDSA performance over secp256k1.

Other costs, such as hashing, were considered so negligible that the scaling section of the wiki could boldly assert as much. Yet hashing can scale badly. The entire transaction must be hashed and verified independently for each of its inputs. A transaction with n inputs will be hashed n times with a few bytes different each time, which precludes reusing previous results (although initial prefixes are shared). It is also subjected to ECDSA signature verification the same number of times. Because the size of the transaction itself also grows with n, total hashing work grows as n². Sure enough, the pathological TX created during the flooding of the network last summer had exactly this pattern. Such quadratic behavior is inherently not scalable.
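A rough model makes the quadratic growth concrete. It assumes the legacy (pre-SegWit) signature scheme, in which verifying each input hashes a copy of the transaction with the other inputs' scripts blanked. The byte counts are rounded approximations chosen for illustration, not exact consensus sizes. The last function estimates the worst case of a single transaction that fills an entire block with inputs.

```python
# Approximate byte counts for the legacy signature-hash preimage (assumed, rounded).
STRIPPED_INPUT = 41   # outpoint (36) + empty script length (1) + sequence (4)
SIGNED_INPUT = 148    # the same input on the wire, with signature and public key
OUTPUT = 34           # typical pay-to-pubkey-hash output
FIXED = 40            # version, counts, locktime, sighash type, signed input's script


def preimage_bytes(n_inputs: int, n_outputs: int) -> int:
    """Bytes hashed to check ONE signature: every input appears, scripts blanked."""
    return FIXED + STRIPPED_INPUT * n_inputs + OUTPUT * n_outputs


def total_bytes_hashed(n_inputs: int, n_outputs: int = 1) -> int:
    """Bytes hashed to check ALL signatures: a fresh preimage per input."""
    return n_inputs * preimage_bytes(n_inputs, n_outputs)


for n in (10, 100, 1000):
    # 10x more inputs -> close to 100x more hashing
    print(n, total_bytes_hashed(n))


def worst_case(block_bytes: int) -> int:
    """Hashing for one TX that fills an entire block with inputs."""
    return total_bytes_hashed(block_bytes // SIGNED_INPUT)


print(worst_case(2_000_000) / worst_case(1_000_000))  # very close to 4
```

Under this model, each tenfold increase in inputs multiplies hashing work by nearly 100. The worst case for 2MB blocks comes out almost exactly four times that for 1MB blocks.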

Doubling the maximum block size leads to a 4x increase in the worst-case scenario. There are different ways to address this problem. Placing a hard limit on the number of inputs is one heavy-handed solution. Segregated witness offers some hope by not requiring a different serialization of the transaction for each input.

But one can still force the pathological behavior as long as Bitcoin allows a signature mode where only the current input and all outputs are signed. That mode has legitimate uses: multiple people can chip in their own funds to the same single transaction, along the lines of a fundraising drive for charity. An alternative is to discourage such activity with economic incentives. Currently, transaction fees are based on simplistic measures such as size in bytes.

Charging the originator of a TX for the actual cost of verifying it would introduce a market-based solution to discourage such activity. That said, defining a better metric is tricky. Long before a block containing the TX appears, that TX will have been broadcast, verified and placed into the mem-pool. Under the covers, the implementation caches the result of signature validation to avoid doing it again.

In other words, CPU load is not a sudden spike occurring when blocks materialize out of thin air; it is spread out over time as TX arrive. This is a useful property for scaling. It might also improve parallelization by distributing CPU-intensive work across multiple cores, if new TX arrive evenly from different peers and are handled by different threads.
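A small memoizing wrapper illustrates the caching idea. This is a hypothetical sketch. SignatureCache and fake_verify are illustrative names, and fake_verify stands in for real ECDSA verification. Bitcoin Core's actual cache differs; for one, it is bounded in memory. Here only successful verifications are remembered, so a failing signature is rechecked each time.

```python
from typing import Callable, Set, Tuple

Key = Tuple[bytes, bytes, bytes]  # (message hash, public key, signature)


class SignatureCache:
    """Remember signatures that already verified, so a TX checked on arrival
    in the mem-pool is not re-verified when it later shows up in a block."""

    def __init__(self, verify: Callable[[bytes, bytes, bytes], bool]) -> None:
        self._verify = verify          # the expensive check (e.g. ECDSA)
        self._valid: Set[Key] = set()
        self.expensive_checks = 0      # how often the expensive check actually ran

    def check(self, msg_hash: bytes, pubkey: bytes, sig: bytes) -> bool:
        key = (msg_hash, pubkey, sig)
        if key in self._valid:
            return True                # cache hit: no crypto work needed
        self.expensive_checks += 1
        ok = self._verify(msg_hash, pubkey, sig)
        if ok:
            self._valid.add(key)       # only successes are remembered
        return ok


def fake_verify(msg_hash: bytes, pubkey: bytes, sig: bytes) -> bool:
    """Hypothetical stand-in for real signature verification."""
    return sig == b"valid"


cache = SignatureCache(fake_verify)
tx_sig = (b"\x11" * 32, b"pubkey", b"valid")
cache.check(*tx_sig)           # TX arrives from a peer: verified once
cache.check(*tx_sig)           # same TX inside a new block: cache hit
print(cache.expensive_checks)  # 1
```

The expensive work happens when the TX first arrives. By the time the block shows up, most of its signatures are already in the cache.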

Nodes also have to store the blockchain and look up information about past transactions when trying to verify a new one. Recall that each input to a transaction is a reference to an output from some previous TX.

As of this writing, the blockchain is around 55GB. Strictly speaking, only unspent outputs need to be retained; those already consumed by a later TX cannot be spent again. That allows for some pruning, but individual nodes have little control over how much churn there is in the system. In practice the concern is not just raw bytes as measured by the Bitcoin protocol. There is also the overhead of loading that data into a structured database for easy access, which adds to the raw size of the blockchain.
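Each input names a previous output by its transaction ID and output index, so a node only needs an index of outputs that are still unspent. The following is a minimal, hypothetical sketch of such a UTXO set. It omits scripts, signatures, coinbase rules and the on-disk database, and it treats a transaction with no inputs as newly minted coins.

```python
from typing import Dict, List, Tuple

OutPoint = Tuple[str, int]  # (txid, output index): how an input names a past output


class UtxoSet:
    """Minimal sketch of an unspent-output set; spent outputs are dropped."""

    def __init__(self) -> None:
        self.unspent: Dict[OutPoint, int] = {}  # outpoint -> value in satoshis

    def apply(self, txid: str, inputs: List[OutPoint], outputs: List[int]) -> None:
        if len(set(inputs)) != len(inputs):
            raise ValueError("same output spent twice in one TX")
        for op in inputs:
            if op not in self.unspent:
                raise ValueError(f"unknown or already spent: {op}")
        # In this sketch, a TX with no inputs stands in for newly minted coins.
        if inputs and sum(outputs) > sum(self.unspent[op] for op in inputs):
            raise ValueError("outputs exceed inputs")
        for op in inputs:
            del self.unspent[op]                # pruned: can never be spent again
        for index, value in enumerate(outputs):
            self.unspent[(txid, index)] = value


utxo = UtxoSet()
utxo.apply("a", [], [5_000])                 # new coins
utxo.apply("b", [("a", 0)], [3_000, 1_900])  # 100 satoshis left over as fee
print(sorted(utxo.unspent))                  # [('b', 0), ('b', 1)]
```

The set shrinks whenever outputs are spent, but how fast it shrinks depends on how often users spend, which is the churn individual nodes cannot control.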

Regardless, larger blocks have only a gradual effect on storage requirements. Doubling blocksize doubles the rate of growth over time; it does not suddenly double existing usage. Some users may have to add disk capacity sooner than they had planned, but disk space had to be added sooner or later anyway. Of all the factors potentially affected by a blocksize increase, this is the least likely to be the bottleneck that causes an otherwise viable full node to drop off the network.
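Here is the worst-case arithmetic for storage growth. It assumes every block is completely full, which is not a given, and ignores database overhead.

```python
BLOCKS_PER_DAY = 24 * 60 * 60 // 600  # 144 blocks at one per 10 minutes


def max_growth_gb_per_year(block_mb: float) -> float:
    """Upper bound on raw chain growth per year if every block is full."""
    return block_mb * BLOCKS_PER_DAY * 365 / 1000  # MB -> GB (decimal units)


existing_gb = 55  # size quoted in the text
for size_mb in (1, 2):
    growth = max_growth_gb_per_year(size_mb)
    print(f"{size_mb} MB blocks: +{growth:.1f} GB/year, "
          f"about {existing_gb + growth:.0f} GB after one year")
```

At 144 blocks per day, 1MB blocks add at most about 52.6GB per year and 2MB blocks about 105.1GB. In both cases the existing 55GB stays where it is and only the slope changes.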

Whether 55GB is already a significant burden, or might become one under various proposals, depends on the hardware in question. Most smartphones, and even low-end tablets with solid-state storage, are probably out of the running. The answer goes back to the larger question of missing MSR, which in turn is a proxy for lack of clarity around the target audience.

At first glance, bandwidth does not appear all that different from storage, in that costs increase linearly. Blocks that are twice as large will take twice as long to transmit, resulting in a longer delay before other nodes can recognize that a new one has been successfully mined.

That could add a few seconds of processing delay. On the face of it, that does not sound too bad. The bandwidth involved is already common even for residential connections today, and it is certainly at the low end of what colocation providers would expect to offer. If going from the status quo of 7 TPS to a much higher TPS is no sweat, why all this hand-wringing over a mere doubling? This is where miners as a group appear to get special dispensation.

There is an assumption that many miners are stuck on relatively slow connections, which is almost paradoxical. These groups command millions of dollars in custom mining hardware and earn thousands of dollars from each block mined.

Yet they are supposedly doomed to connect to the Internet with dial-up modems, unable to afford a better ISP. This strange state of affairs is sometimes justified by two excuses.

There is no denying that delays in receiving a block are very costly for miners. Suppose a new block is discovered but some miner operating in the desert with bad connectivity has not received it. That miner will be wasting cycles trying to mine on an outdated branch. Their objective is to reset their search as soon as possible and start mining on top of the latest block. Every extra second of delay in receiving or validating a block increases the probability of wasting time on a futile search. Worse, the miner may actually find a competing block and create a temporary fork. That fork will be resolved with one side or the other losing all of its work when the longest chain wins out.
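A simple model can estimate the cost of delay. All of its assumptions are illustrative. Transfer time is block size divided by link speed, ignoring latency, relay optimizations and validation time. The link is a hypothetical 1 Mbit/s connection. Block discovery is modeled as a Poisson process with a 600-second mean, so the chance that someone else finds a block during a delay of d seconds is 1 - exp(-d/600).

```python
import math

BLOCK_INTERVAL_S = 600  # average time between blocks


def transfer_seconds(block_bytes: int, mbit_per_s: float) -> float:
    """Time to download a block, ignoring latency and protocol overhead."""
    return block_bytes * 8 / (mbit_per_s * 1_000_000)


def stale_risk(delay_s: float) -> float:
    """Chance another block is found somewhere during `delay_s` seconds,
    assuming block discovery is a Poisson process (a simplification)."""
    return 1 - math.exp(-delay_s / BLOCK_INTERVAL_S)


for size in (1_000_000, 2_000_000):
    d = transfer_seconds(size, 1.0)  # hypothetical 1 Mbit/s link
    print(f"{size // 1_000_000} MB: {d:.0f} s delay, risk {stale_risk(d):.2%}")
```

Under these assumptions, a 1MB block takes 8 seconds to arrive and a 2MB block takes 16 seconds. That roughly doubles the exposure per block, from about 1.3% to about 2.6%.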

Network connections are also the least actionable of all these resources. A node operator short on CPU or disk space can simply buy more hardware, and such upgrades do not need to be coordinated with anyone.

But network pipes are part of the infrastructure of the region, often controlled by telcos or governments that are neither responsive nor agile. An individual entity has few options for upgrading its connectivity. Satellite-based internet is one, but it is still high-latency and not competitive with fiber.

Scarce block space forces transactions to compete for inclusion, which creates competitive pressure on fees. Remove that scarcity and provide lots of spare room for growth, and that competitive pressure goes away.

That may not matter much at the moment.